AdaBoost, short for Adaptive Boosting, is a machine learning algorithm developed by Freund and Schapire in 1996. It constructs classifiers in a supervised learning setting. Training begins with a single weak learner, a model that performs only slightly better than random guessing. Each subsequent round gives more weight to the instances that the previous weak learners classified incorrectly and fits a new weak learner to the reweighted data. By doing this, the algorithm builds a strong classifier from a set of weak learners.

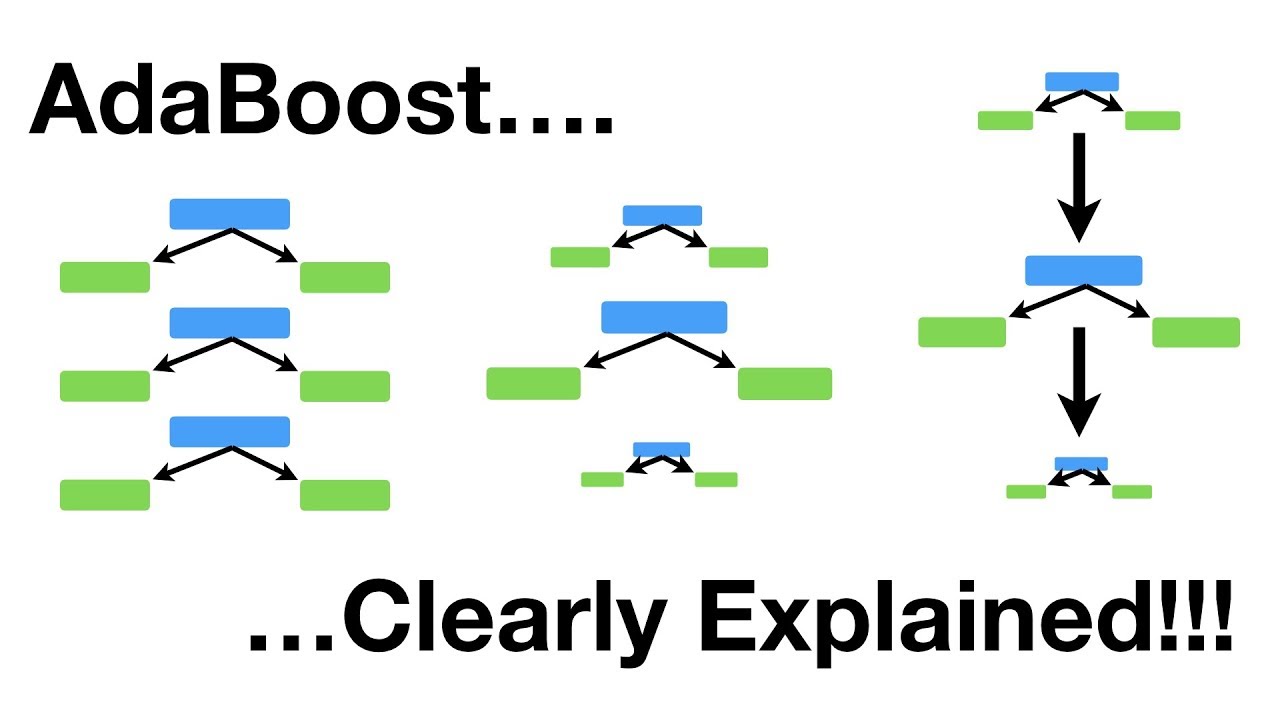

The aim of AdaBoost is to reduce the error rate of a classifier while maintaining a low computational cost. It works by combining multiple “weak” classifiers into one “strong” classifier. In each round, the algorithm updates the weight of every training instance so that the next weak classifier focuses on the difficult instances, those that earlier weak classifiers got wrong. The final strong classifier is a weighted vote of the weak classifiers, in which more accurate weak classifiers receive a larger say.
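The reweighting loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes binary labels encoded as +1 and -1, one-dimensional inputs, and threshold "decision stumps" as the weak classifiers. The function names (`train_stump`, `adaboost_fit`, `adaboost_predict`) and the toy data are made up for this example.

```python
import math

def stump_predict(x, threshold, polarity):
    # A decision stump: one side of the threshold gets +1, the other -1.
    return polarity if x <= threshold else -polarity

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            # Weighted error: total weight of the misclassified instances.
            err = sum(
                w for w, x, y in zip(weights, xs, ys)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def adaboost_fit(xs, ys, n_rounds=3):
    """Train an ensemble of weighted stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with uniform instance weights
    ensemble = []
    for _ in range(n_rounds):
        err, threshold, polarity = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        # The stump's vote grows as its weighted error shrinks.
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, polarity))
        # Raise the weight of misclassified instances, lower the rest.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]  # renormalize to sum to 1
    return ensemble

def adaboost_predict(ensemble, x):
    # Weighted vote of all stumps; the sign of the score is the label.
    score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data (invented for illustration): no single stump separates it.
XS = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
YS = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

model = adaboost_fit(XS, YS, n_rounds=3)
predictions = [adaboost_predict(model, x) for x in XS]
```

On this toy set, the best single stump of this form misclassifies three of the ten points, while the weighted vote of three stumps classifies all ten correctly.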

AdaBoost is one of the most popular and successful machine learning algorithms. It is a powerful approach to classification problems and is used in many real-world applications such as remote sensing, object recognition, and medical diagnosis. It is computationally efficient, scales to large data sets, and can be adapted to online learning tasks. In practice it is often resistant to overfitting, but because it keeps increasing the weight of misclassified instances, it can be sensitive to noisy labels and outliers.

AdaBoost can be applied to both classification and regression problems, the latter through variants such as AdaBoost.R2. The “adaptive” in its name refers to how each round adapts to the errors of the previous weak learners, so the ensemble keeps improving as more rounds are added.