CycleGAN is a type of generative adversarial network (GAN) used for unsupervised image-to-image translation. It was introduced in 2017 by Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros at UC Berkeley. It was designed to learn a mapping between two image domains without any paired training examples.

Unlike supervised image-to-image translation models such as pix2pix, CycleGAN does not need a "paired" dataset in which every source image is matched with a corresponding target image. Instead, it trains on two independent collections of images, one per domain, and learns from both collections simultaneously. This helps bridge the gap between two dissimilar image domains, and makes translation feasible where paired data would be expensive or impossible to collect.
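To make the idea of unpaired data concrete, here is a minimal sketch of how training batches could be drawn. The function `unpaired_batches` and the file names are hypothetical and only illustrate the sampling pattern; they are not taken from the original CycleGAN code.

```python
import random

def unpaired_batches(domain_a, domain_b, batch_size, steps, seed=0):
    """Yield (batch_a, batch_b) tuples drawn independently from each domain."""
    rng = random.Random(seed)
    for _ in range(steps):
        # Each domain is sampled on its own: item i of batch_a has no
        # relationship to item i of batch_b.
        batch_a = rng.sample(domain_a, batch_size)
        batch_b = rng.sample(domain_b, batch_size)
        yield batch_a, batch_b

# Hypothetical file lists; the two collections differ in size and share no pairing.
photos = [f"photo_{i:03d}.jpg" for i in range(7)]
paintings = [f"painting_{i:03d}.jpg" for i in range(12)]

batches = list(unpaired_batches(photos, paintings, batch_size=3, steps=4))
for batch_a, batch_b in batches:
    print(batch_a, batch_b)
```

The two collections can have different sizes, and nothing links a given photo to a given painting. A paired dataset would instead need one matching painting for every photo.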

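The CycleGAN model consists of two generators and two discriminators. One generator takes an input image from the first domain and produces an output image in the second domain; the other generator translates in the reverse direction. Each discriminator is tied to one domain and decides whether an image is a real sample from that domain or one produced by a generator. A cycle-consistency loss links the two generators: translating an image to the other domain and back again should reproduce the original image.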
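CycleGAN trains two generators, one per translation direction, and ties them together with a cycle-consistency loss. The sketch below shows that loss using NumPy. The "generators" `G`, `F`, and `F_bad` are hypothetical affine stand-ins rather than neural networks, chosen only so the behavior of the loss is easy to see. The adversarial term is written in the least-squares form, which is an assumption about the loss variant.

```python
import numpy as np

def cycle_consistency_loss(G, F, x_batch, y_batch):
    """L1 cycle loss: F(G(x)) should recover x, and G(F(y)) should recover y."""
    forward_cycle = np.mean(np.abs(F(G(x_batch)) - x_batch))
    backward_cycle = np.mean(np.abs(G(F(y_batch)) - y_batch))
    return forward_cycle + backward_cycle

def lsgan_generator_loss(D, fake_batch):
    """Least-squares adversarial loss for a generator: push D(fake) toward 1."""
    return np.mean((D(fake_batch) - 1.0) ** 2)

# Hypothetical stand-in generators acting on flattened "images".
def G(x):          # domain X -> domain Y
    return 2.0 * x + 1.0

def F(y):          # domain Y -> domain X, the exact inverse of G
    return (y - 1.0) / 2.0

def F_bad(y):      # a reverse mapping that does not invert G
    return y

rng = np.random.default_rng(0)
x = rng.random((4, 8))   # 4 samples from domain X
y = rng.random((5, 8))   # 5 samples from domain Y (unpaired, different count)

loss_good = cycle_consistency_loss(G, F, x, y)
loss_bad = cycle_consistency_loss(G, F_bad, x, y)
print(loss_good, loss_bad)
```

When `F` inverts `G`, the cycle loss is zero up to floating-point rounding. With `F_bad` it is large, which is the signal that pushes the two generators toward being mutual inverses. In a real model this term is added to the adversarial losses of both generators, usually with a weighting factor.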

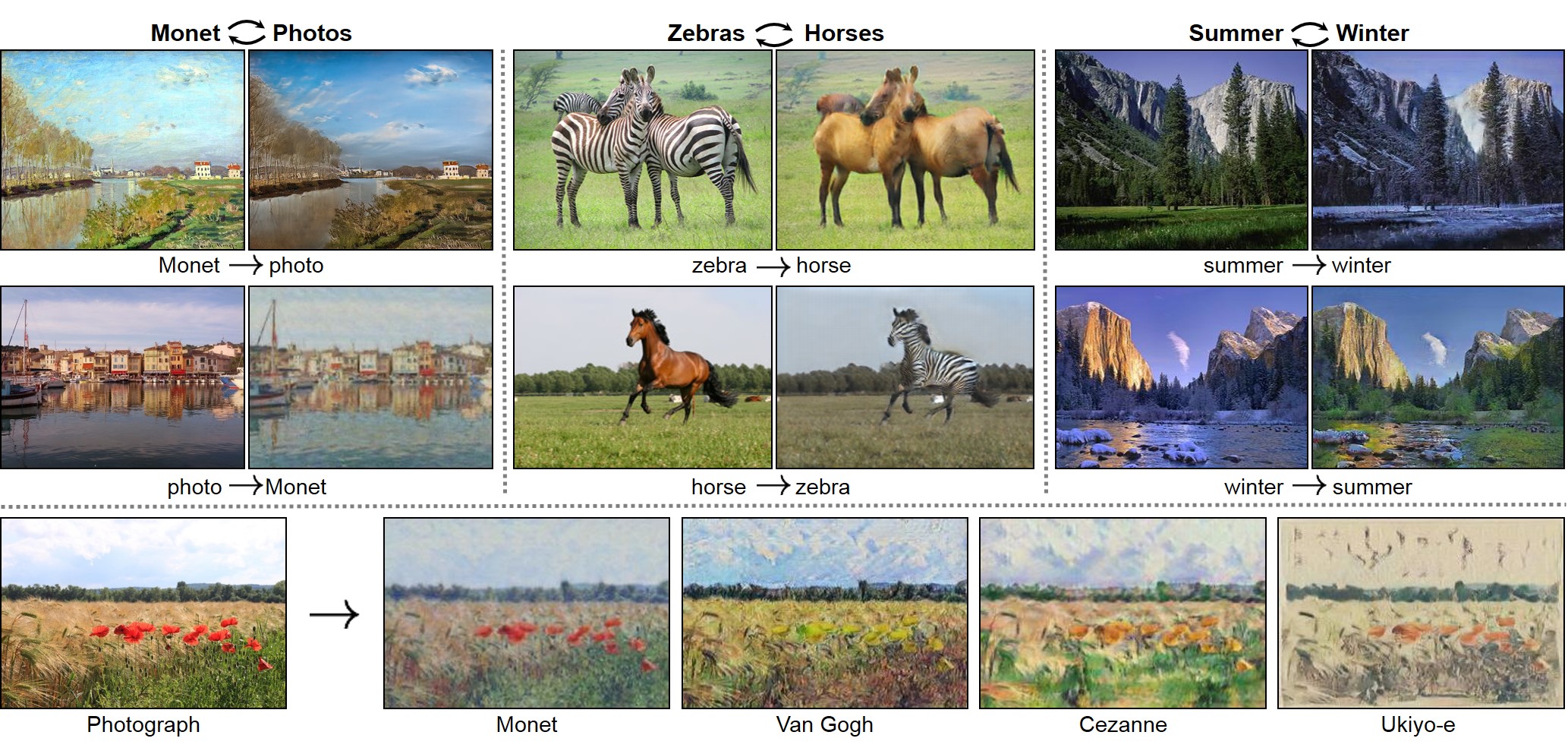

A typical application of CycleGAN is style transfer, in which an image from one domain is converted into a corresponding image in another domain. For example, CycleGAN could take a photo of an apple and convert it into a watercolor painting of the same apple. Translations of this kind are useful in computer vision and robotics, where they can support tasks such as object recognition, for example by making synthetic training images look more like real ones.

Since its introduction in 2017, CycleGAN has been used in a variety of image-to-image translation tasks, including style transfer, object transfiguration (such as turning horses into zebras), season transfer, and photo enhancement. Its ability to learn transformations from unpaired data makes it an effective tool wherever paired datasets are unavailable.