Hyperparameter tuning is the process by which engineers adjust the hyperparameters of a machine learning algorithm, the configuration settings that control how it learns, to improve its accuracy and performance. It is an iterative, trial-and-error process: the engineer adjusts the hyperparameter values and evaluates the resulting change in the algorithm’s performance.

Hyperparameters differ from standard model parameters, which the machine learning algorithm learns while training on data. Hyperparameters are set by the engineer before training begins and typically remain fixed throughout the training process. Examples of hyperparameters include the number of layers in a neural network, the learning rate of an optimization algorithm, and the number of trees in a random forest.
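
To make the distinction concrete, here is a minimal sketch in plain Python. It fits a straight line by gradient descent: the learning rate and the number of epochs are hyperparameters chosen up front, while the slope `w` and intercept `b` are model parameters learned from the data. The toy data, the `train` function, and the constant names are illustrative assumptions, not part of any particular library.

```python
# Hyperparameters: chosen by the engineer before training starts.
LEARNING_RATE = 0.05
N_EPOCHS = 200


def train(xs, ys, learning_rate, n_epochs):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # model parameters: updated from the data during training
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # The learning rate scales each step but is never itself updated.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data (assumed for illustration) lying on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys, LEARNING_RATE, N_EPOCHS)
print(f"learned parameters: w={w:.2f}, b={b:.2f}")
```

Changing `LEARNING_RATE` or `N_EPOCHS` changes how well and how quickly `w` and `b` are learned, but the training loop never modifies those two settings itself.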

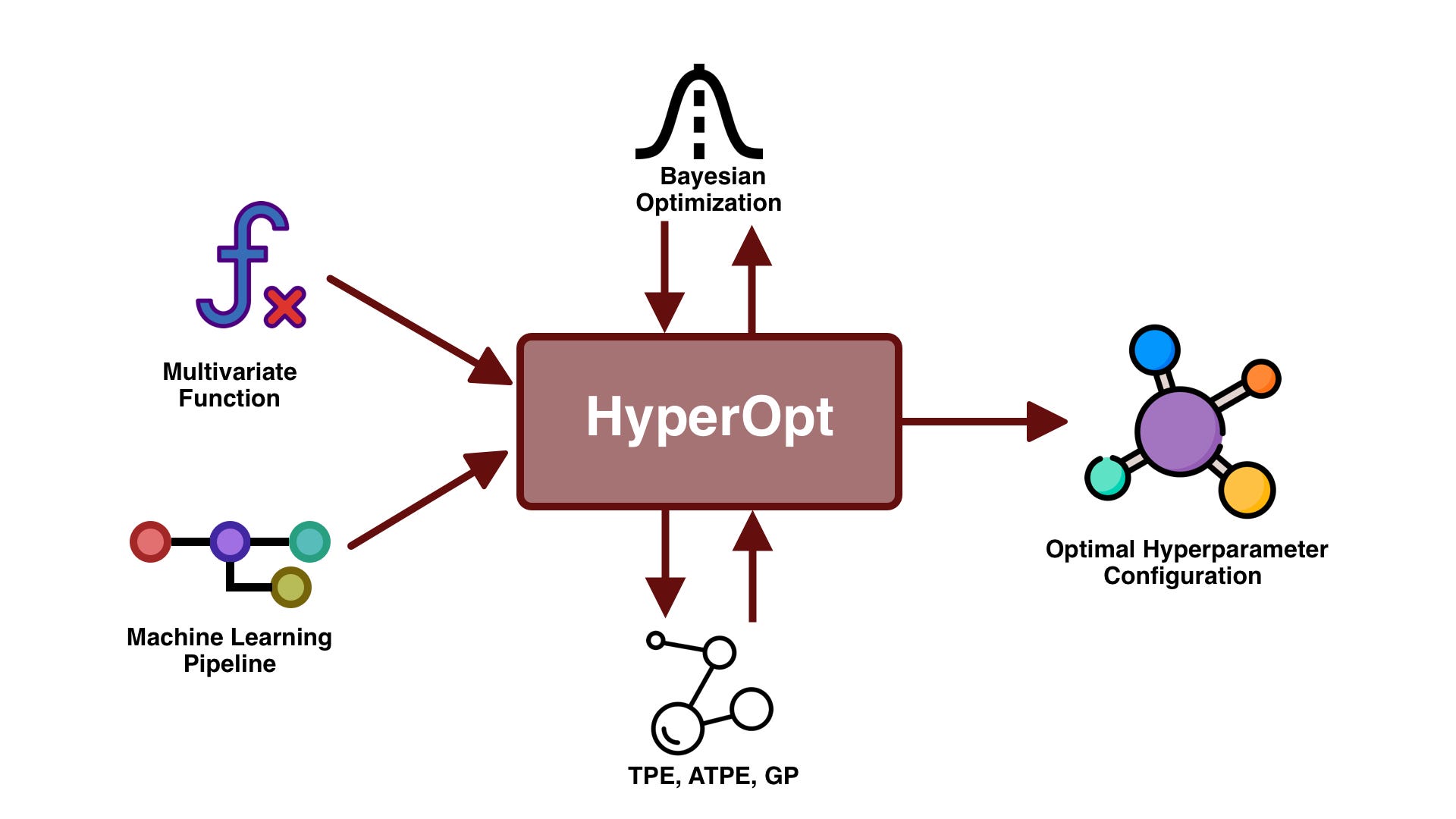

In the process of hyperparameter tuning, engineers search for the best values of the hyperparameters by training the algorithm multiple times. Each time, the hyperparameters are adjusted, the model is retrained on the training data, and its performance is evaluated on held-out validation data, allowing the engineer to identify the setting most likely to yield the best results on new data. This process is often referred to as “hyperparameter optimization”.
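
The search loop described above can be sketched as a small grid search, one common way to organize the trial and error. The example below is a sketch under stated assumptions: the toy data, the candidate values, and the helper names `train` and `mse` are all hypothetical. Each candidate setting is scored on a held-out validation split rather than on the data used for fitting.

```python
from itertools import product


def train(xs, ys, learning_rate, n_epochs):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(w, b, xs, ys):
    """Mean squared error of the line y = w * x + b on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data (assumed): y = 2x + 1 plus a small deterministic perturbation.
xs = [0.25 * i for i in range(17)]
ys = [2 * x + 1 + 0.05 * ((2 * i) % 5 - 2) for i, x in enumerate(xs)]

# Even-indexed points are used for training, odd-indexed for validation.
train_x, train_y = xs[0::2], ys[0::2]
val_x, val_y = xs[1::2], ys[1::2]

# Candidate hyperparameter values to try (an illustrative grid).
learning_rates = [0.001, 0.01, 0.1]
epoch_counts = [10, 100, 1000]

results = []
for lr, n_epochs in product(learning_rates, epoch_counts):
    w, b = train(train_x, train_y, lr, n_epochs)  # retrain from scratch
    score = mse(w, b, val_x, val_y)               # evaluate on unseen points
    results.append((score, lr, n_epochs))

# The setting with the lowest validation error wins.
best_mse, best_lr, best_epochs = min(results)
print(f"best: learning_rate={best_lr}, n_epochs={best_epochs}, "
      f"validation MSE={best_mse:.4f}")
```

Scoring on a separate validation split is a deliberate choice here: a setting that merely fits the training points well is not guaranteed to perform well on new data, which is what tuning is ultimately trying to improve.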

Hyperparameters matter because they can significantly affect the performance of the final model: the same algorithm trained on the same data can perform very differently under different settings. Hyperparameter tuning is therefore an important step in developing any machine learning model and should not be overlooked.