Proximal Policy Optimization (PPO) is an algorithm in reinforcement learning that is used to optimize policies with respect to expected returns. It is a policy gradient method that is an alternative to Trust Region Policy Optimization (TRPO). The basic idea behind PPO is to encourage exploration while still encouraging exploitation.

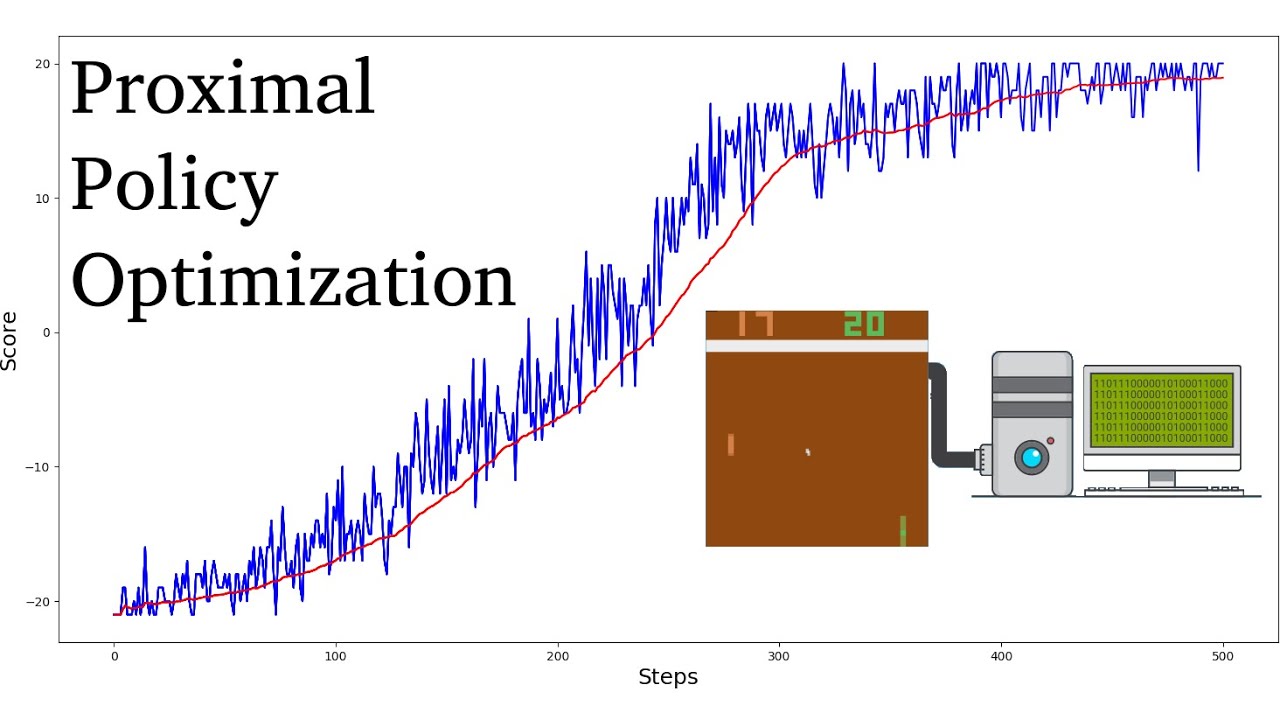

PPO seeks to find the optimal policy by performing an optimization step on the loss function of a given agent’s policy. The agent then selects actions based on this policy. This optimization allows the agent to make better decisions and therefore attain higher rewards from the environment.

The algorithm works by taking a sampled batch of episodes from an environment and computing the expected returns for that batch. It then uses the policy’s parameters to optimize the expected returns of the sampled batch, while also taking into account the constraints of the policy.

One of the advantages of PPO is that it can be used to train policies in multiple different environments, as the parameters of the policy are the same for each environment, meaning the same policy can be used for different environments. This makes PPO highly scalable and more generalizable than other reinforcement learning algorithms.

PPO has shown its ability to outperform other reinforcement learning algorithms in a variety of domains, including robotics, video game playing, and board game playing. It is an effective model-free reinforcement learning algorithm and has become more popular recently due to its scalability and ease of use.