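Transformer-XL is a neural language model introduced in 2019 by researchers at Carnegie Mellon University and Google Brain. It builds on the Transformer architecture, which underlies most recent language processing models. Transformer-XL was designed to address long-range dependencies in language modeling. A standard Transformer language model is trained on fixed-length segments and cannot attend to anything outside the current segment, so it cannot learn dependencies longer than that window.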

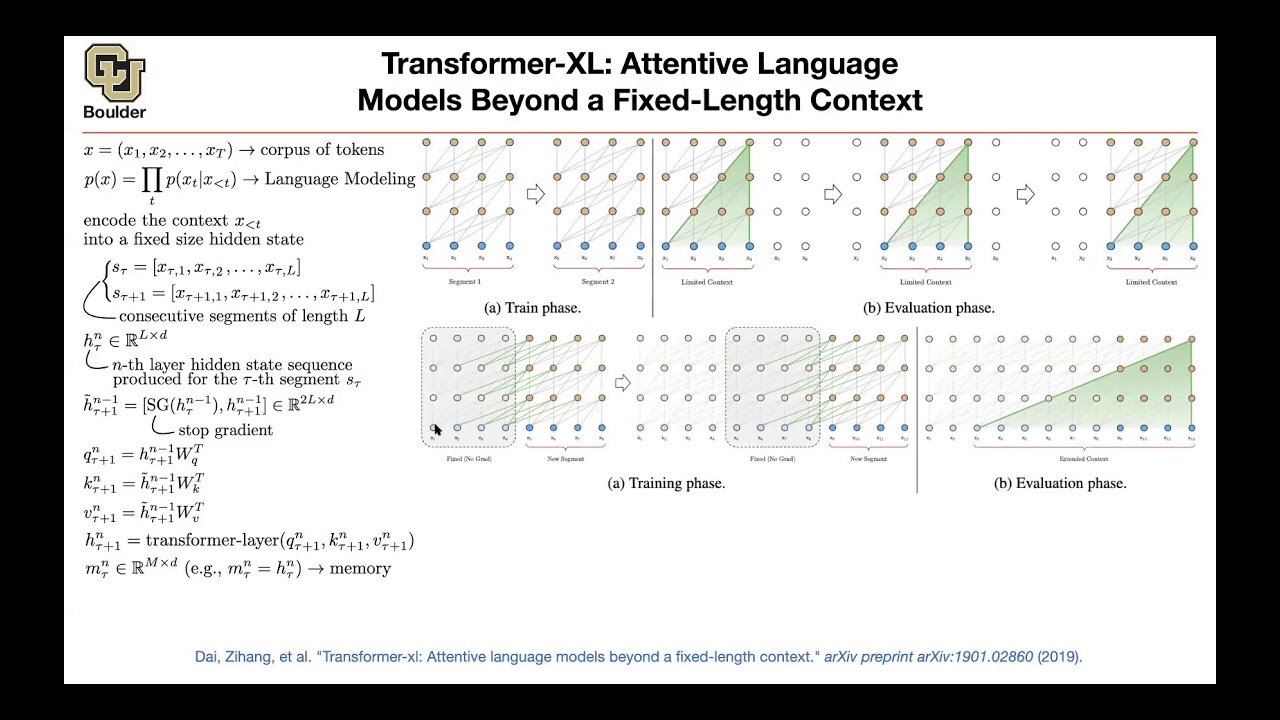

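At its core, Transformer-XL is an autoregressive model, meaning that it predicts each token from the sequence of tokens that precede it. What sets it apart from earlier Transformer language models is its combination of segment-level recurrence and relative positional encodings. The model processes the input one fixed-length segment at a time and caches the hidden states it computed for the previous segment. When it moves on to the next segment, every layer can attend to those cached states as additional context. The cache is reused rather than recomputed, and no gradients flow into it, so the effective context extends beyond a single segment while the cost of processing each segment stays bounded. Relative positional encodings are what make this reuse coherent: with absolute positions, a token in the cached segment and a token at the same offset in the current segment would receive identical position information.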
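The segment-level recurrence can be sketched in plain Python. This is a toy under several simplifying assumptions, not the paper's implementation. Each "layer" is a single attention head with no learned projections (keys and values are the raw vectors). There is no feed-forward sublayer, no positional encoding of any kind, and no training, so the rule that gradients do not flow into the cache appears only as a comment. The names `attention_layer` and `run_with_recurrence` and the parameters `segment_len`, `mem_len`, and `n_layers` are hypothetical. What the sketch does show is the caching pattern: for each layer, the inputs seen during one segment are appended to a bounded memory that the next segment attends to.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_layer(inputs: List[Vector], memory: List[Vector]) -> List[Vector]:
    """Toy causal self-attention over [memory + current segment].

    No learned projections: keys and values are the context vectors themselves.
    Position i may attend to every cached memory vector and to current-segment
    positions 0..i, followed by a residual connection.
    """
    outputs: List[Vector] = []
    for i, query in enumerate(inputs):
        context = memory + inputs[: i + 1]
        scale = math.sqrt(len(query))
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in context]
        weights = softmax(scores)
        mixed = [sum(w * key[d] for w, key in zip(weights, context))
                 for d in range(len(query))]
        outputs.append([m + q for m, q in zip(mixed, query)])  # residual connection
    return outputs


def run_with_recurrence(sequence: List[Vector], segment_len: int,
                        mem_len: int, n_layers: int = 2) -> List[Vector]:
    """Process `sequence` one segment at a time, caching each layer's inputs.

    memories[n] holds up to `mem_len` inputs that layer n saw in earlier
    segments; the next segment attends to them as extra context.
    """
    memories: List[List[Vector]] = [[] for _ in range(n_layers)]
    outputs: List[Vector] = []
    for start in range(0, len(sequence), segment_len):
        hidden = sequence[start:start + segment_len]
        for layer in range(n_layers):
            layer_input = hidden
            # Attend using the memory cached from previous segments.
            hidden = attention_layer(layer_input, memories[layer])
            # Update the cache for the next segment, keeping the newest
            # mem_len states. A trainable model would also stop gradients here.
            extended = memories[layer] + layer_input
            memories[layer] = extended[max(0, len(extended) - mem_len):]
        outputs.extend(hidden)
    return outputs
```

Comparing a one-layer run with `mem_len=2` against one with `mem_len=0` on `[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]` (two segments of length 2) shows the effect. The first segment's outputs are identical, but the first position of the second segment can only draw on earlier tokens when the memory is enabled.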

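At the time of its publication, Transformer-XL achieved state-of-the-art results on several language modeling benchmarks, including enwik8, text8, WikiText-103, One Billion Word, and Penn Treebank. Its authors report that it learns dependencies about 80% longer than RNNs and 450% longer than vanilla Transformers. It is also much faster than a vanilla Transformer at evaluation time, by up to 1,800+ times according to the authors, because it reuses the cached representations from previous segments instead of re-encoding an entire context window from scratch for every prediction.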
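The evaluation-time speedup comes from avoiding recomputation, and a back-of-the-envelope count makes the gap concrete. The sketch below is a simplified cost model assumed for illustration. It counts only query-key dot products in a single attention layer, ignores projections and feed-forward layers, and assumes the context window of `context_len` positions is already full. A vanilla Transformer evaluated with a sliding window re-encodes all `context_len` positions for every prediction, while a model with a state cache computes one new query per prediction against the cached keys. The function names `sliding_window_pairs` and `cached_pairs` are hypothetical.

```python
def sliding_window_pairs(num_tokens: int, context_len: int) -> int:
    """Query-key dot products for sliding-window evaluation of a vanilla Transformer.

    For every predicted token the whole window of `context_len` positions is
    re-encoded from scratch; causal attention over L positions costs
    L * (L + 1) / 2 query-key pairs in one layer.
    """
    per_prediction = context_len * (context_len + 1) // 2
    return num_tokens * per_prediction


def cached_pairs(num_tokens: int, context_len: int) -> int:
    """Query-key dot products when earlier hidden states are cached.

    Each new token issues a single query against the cached keys of the
    previous context_len - 1 positions plus its own key: context_len pairs.
    """
    return num_tokens * context_len


if __name__ == "__main__":
    tokens, window = 1000, 512
    full = sliding_window_pairs(tokens, window)
    cached = cached_pairs(tokens, window)
    print(full, cached, full / cached)  # 131328000 512000 256.5
```

Under this model the ratio is `(context_len + 1) / 2`, so the savings grow linearly with the context length; for `context_len = 512` it is 256.5. This is an idealized operation count, not a prediction of measured wall-clock speedups.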

Transformer-XL's segment-level recurrence has influenced later work; XLNet, for example, uses Transformer-XL as its backbone architecture. As long-context modeling remains an active area of research, the idea of reusing cached states across segments is likely to stay relevant.