Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. "First-order" means it relies only on the gradient (first derivatives), not on second derivatives. It is used in machine learning and many other fields to optimize an objective function, and it has been applied to optimization problems in data science, statistics, deep learning, and beyond.
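To illustrate why the result is a *local* minimum, here is a minimal Python sketch that runs plain gradient descent on a hypothetical non-convex function, `f(x) = x**4 - 3*x**2 + x`, chosen only because it has two local minima. The starting points, learning rate, and step count are arbitrary assumptions for the example.

```python
def f(x: float) -> float:
    """Hypothetical non-convex test function with two local minima."""
    return x**4 - 3 * x**2 + x


def df(x: float) -> float:
    """Derivative of f: the only information gradient descent uses."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent_1d(x0: float, lr: float = 0.01, steps: int = 1000) -> float:
    """Repeatedly step against the derivative, starting from x0."""
    x = x0
    for _ in range(steps):
        x -= lr * df(x)  # move downhill along the local slope
    return x


left = gradient_descent_1d(-2.0)
right = gradient_descent_1d(2.0)
print(f"start -2.0 -> x = {left:.4f}, f(x) = {f(left):.4f}")
print(f"start  2.0 -> x = {right:.4f}, f(x) = {f(right):.4f}")
```

Because the algorithm only follows the local slope, the run started at -2 settles in the left minimum (the global one for this function), while the run started at 2 stops in the shallower right minimum.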

The algorithm is used to find a set of parameters (such as the weights in a neural network) that minimizes a given cost function. Starting from arbitrary (often random) initial values for the parameters, it repeatedly moves them "downhill" on the surface defined by the cost function.
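As a concrete sketch of that idea, the following Python example fits a line `y = w*x + b` by minimizing the mean squared error, starting from random values of `w` and `b`. The toy data (generated from `y = 2x + 1`), the learning rate of 0.05, and the 2000 iterations are all assumptions chosen for illustration, and the gradient formulas are derived by hand for this specific cost.

```python
import random

# Hypothetical toy data generated from y = 2x + 1 (no noise), for illustration only.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def cost(w: float, b: float) -> float:
    """Mean squared error of the line y = w*x + b on the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w: float, b: float) -> tuple[float, float]:
    """Partial derivatives of the mean squared error with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


random.seed(0)
# Arbitrary starting point: no good initial guess is required here.
w, b = random.uniform(-5, 5), random.uniform(-5, 5)
learning_rate = 0.05
for _ in range(2000):
    dw, db = gradient(w, b)
    w -= learning_rate * dw  # step each parameter against its partial derivative
    b -= learning_rate * db

print(f"w = {w:.3f}, b = {b:.3f}, cost = {cost(w, b):.6f}")
```

Since this cost is convex in `w` and `b`, any starting point leads to the same answer; for non-convex costs such as neural network losses, the starting point can matter.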

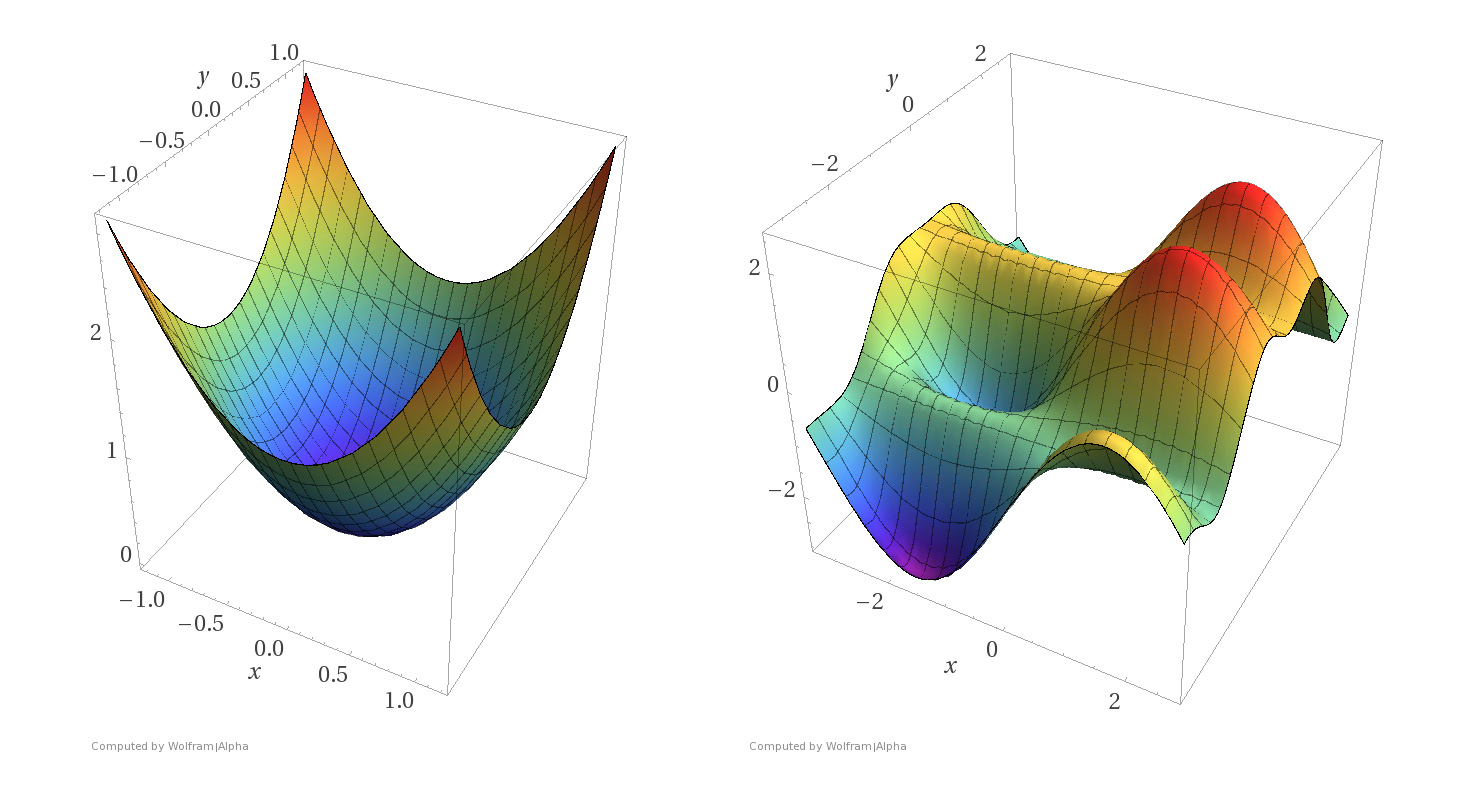

At each step, the algorithm moves the parameters in the direction of steepest descent, which is the negative of the cost function's gradient. The length of each step is the gradient's magnitude scaled by a parameter called the “learning rate.” A learning rate that is too small makes progress slow, while one that is too large can overshoot the minimum and even diverge, so it is typically tuned until a satisfactory result is achieved.
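In symbols, each step is $\theta_{t+1} = \theta_t - \eta \nabla J(\theta_t)$, where $\theta$ are the parameters, $J$ is the cost function, and $\eta$ is the learning rate. The minimal Python sketch below applies this rule to the hypothetical cost `J(theta) = theta**2` (gradient `2 * theta`) with three assumed learning rates, to show how the choice of learning rate affects the outcome.

```python
def grad(theta: float) -> float:
    """Gradient of the toy cost J(theta) = theta**2."""
    return 2.0 * theta


def run(learning_rate: float, theta0: float = 10.0, steps: int = 50) -> float:
    """Apply the update theta <- theta - learning_rate * grad(theta) repeatedly."""
    theta = theta0
    for _ in range(steps):
        theta = theta - learning_rate * grad(theta)
    return theta


for lr in (0.01, 0.1, 1.1):
    print(f"learning rate {lr:<4}: theta after 50 steps = {run(lr):.6g}")
```

For this cost every step multiplies `theta` by `(1 - 2 * learning_rate)`, so 0.01 creeps slowly toward the minimum at 0, 0.1 gets very close, and any learning rate above 1 (such as 1.1) overshoots further on each step and diverges.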

The algorithm repeats these steps until the cost function stops decreasing meaningfully (in practice, until the improvement or the gradient falls below a small tolerance), or until a preset maximum number of iterations is reached.
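Both stopping conditions can be sketched in a few lines of Python. In this minimal version, which assumes a one-dimensional parameter for simplicity, `tol` is the smallest decrease in cost still counted as progress and `max_iters` is the iteration cap; both names and their default values are illustrative choices, not a standard API.

```python
from typing import Callable


def gradient_descent(
    cost: Callable[[float], float],
    grad: Callable[[float], float],
    x0: float,
    learning_rate: float = 0.1,
    tol: float = 1e-10,
    max_iters: int = 10_000,
) -> tuple[float, int]:
    """Minimize a 1-D cost; return the final point and the iterations used."""
    x = x0
    current = cost(x)
    for i in range(1, max_iters + 1):
        x_new = x - learning_rate * grad(x)
        new_cost = cost(x_new)
        improvement = current - new_cost
        if improvement > 0:
            x, current = x_new, new_cost  # accept the downhill step
        if improvement <= tol:
            return x, i  # the cost no longer decreases meaningfully
    return x, max_iters  # safety net: iteration cap reached


# Example cost (x - 3)**2 with its minimum at x = 3.
x_min, iters = gradient_descent(lambda x: (x - 3) ** 2, lambda x: 2 * (x - 3), x0=0.0)
print(f"x = {x_min:.5f} after {iters} iterations")

# With a tiny cap, the loop stops early, before reaching the minimum.
capped, used = gradient_descent(
    lambda x: (x - 3) ** 2, lambda x: 2 * (x - 3), x0=0.0, max_iters=5
)
print(f"x = {capped:.5f} after {used} iterations (capped)")
```

The tolerance test implements "stop when the cost no longer decreases", while `max_iters` guarantees termination even when the cost keeps improving slowly or the learning rate is poorly chosen.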

Gradient descent is widely used in data science algorithms and has been adopted across many sectors. In particular, the Indian Institute of Technology has developed applications of it in image processing, estimation problems, time series forecasting, and machine translation. Gradient descent is also used in deep learning to optimize the weights of artificial neural networks.
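As a small-scale illustration of weight optimization, the sketch below trains a single sigmoid neuron (equivalent to logistic regression) with gradient descent on a cross-entropy loss. The training set (the logical OR of two inputs), the learning rate, and the iteration count are assumptions chosen for the example. The gradients are written out by hand here, whereas deep learning frameworks compute them automatically through backpropagation.

```python
import math

# Hypothetical training set: the logical OR of two binary inputs.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, x: tuple[float, float]) -> float:
    """Output of a single sigmoid neuron for input x."""
    return sigmoid(weights[0] * x[0] + weights[1] * x[1] + bias)


weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5
for _ in range(5000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for x, y in data:
        # For sigmoid + cross-entropy, dLoss/dz simplifies to (prediction - target).
        err = predict(weights, bias, x) - y
        grad_w[0] += err * x[0]
        grad_w[1] += err * x[1]
        grad_b += err
    n = len(data)
    # Full-batch update: average the gradients, then step against them.
    weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b / n

for x, y in data:
    print(x, "target", y, "prediction", round(predict(weights, bias, x), 3))
```

Real networks have many layers and millions of weights, but each training step follows the same pattern: compute the gradient of the loss with respect to every weight, then move each weight a small step against it.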