Long Short-Term Memory (LSTM) is a type of Recurrent Neural Network (RNN) introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997. It is used in deep learning, particularly for natural language processing (NLP) and time series processing. It was designed as an alternative to traditional RNNs, which suffer from the vanishing gradient problem: gradients shrink as they are propagated back through many time steps, which makes long-range dependencies hard to learn.

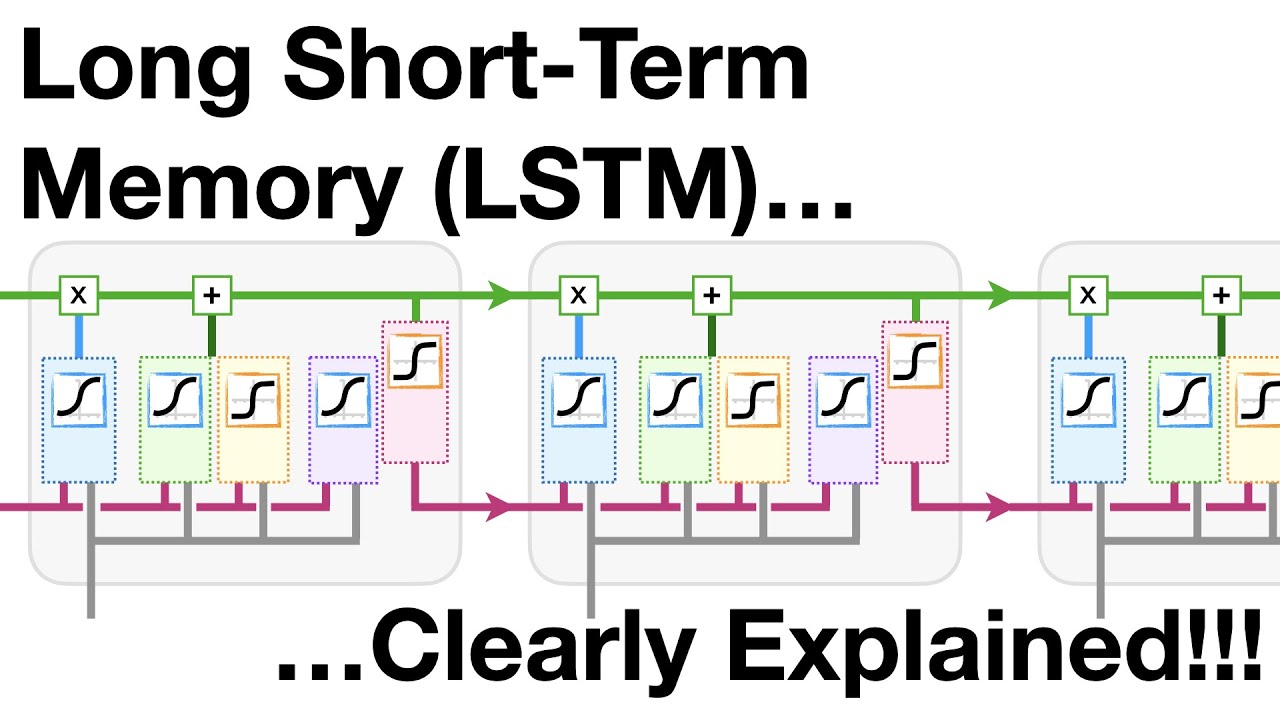

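LSTM networks are composed of special structures called blocks (also called cells). In the now-standard formulation, each block contains a memory cell, whose state is carried from one time step to the next, and three gates that regulate it: an input gate that controls how much new information is written to the cell, a forget gate that controls how much of the existing state is kept, and an output gate that controls how much of the state is exposed as the block's output. Each gate is a small layer of neurons that takes the current input and the block's previous output and, depending on its weights (i.e. the synaptic connections between neurons), produces values between 0 and 1. Because the cell state can pass through many time steps largely unchanged, the network can remember information over a longer period of time.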
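To make the gating concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the commonly used formulation with input, forget, and output gates plus a candidate update; the names `lstm_step`, `affine`, `init_params`, and the layout of the `params` dict are hypothetical choices for illustration, not part of any library. It shows only the forward pass and is not meant as an efficient or trainable implementation.

```python
import math
import random


def sigmoid(x):
    """Logistic function; squashes x into (0, 1). Fine for the small values used here."""
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One forward time step of an LSTM block.

    `params` (hypothetical layout) maps "i", "f", "o", "g" to (W, U, b) tuples.
    Returns the new hidden state h and the new cell state c.
    """
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate values

    # Cell state: keep a fraction of the old memory, write a fraction of the candidate.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Hidden state: a gated, squashed view of the cell state.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases, for demonstration only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        name: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for name in ("i", "f", "o", "g")
    }


# Run a short three-step sequence through the block, carrying h and c forward.
params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    h, c = lstm_step(x, h, c, params)
```

The line that updates `c` is the part that matters for memory: when the forget gate is close to 1 and the input gate close to 0, the cell state is copied forward almost unchanged, which is how information can persist over many steps.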

LSTM networks have been widely used in applications such as language processing, speech recognition, text-to-speech conversion, and natural language understanding. These are all tasks in which the relevant context can lie many time steps in the past, which is where LSTMs tend to be more effective than traditional RNNs.

In summary, Long Short-Term Memory (LSTM) is a type of Recurrent Neural Network that uses gated memory cells to store values over long periods of time, which makes it suited to complex sequence tasks such as natural language processing.