Normalization in data preprocessing is the process of transforming data into a consistent form, for example a common numeric scale or a standard format, so that computer systems can use it more easily. It is a vital step in data preprocessing because it standardizes data, resolves inconsistencies, and reduces redundancy.

Data preprocessing is often done to prepare data for a machine learning system, and normalization is one of its most important components. It puts the data into a consistent format that is easier to read and interpret. Without normalization, values recorded in different units, ranges, or formats are harder for machines to process and compare. Normalization keeps the data consistent so that models can use it reliably.

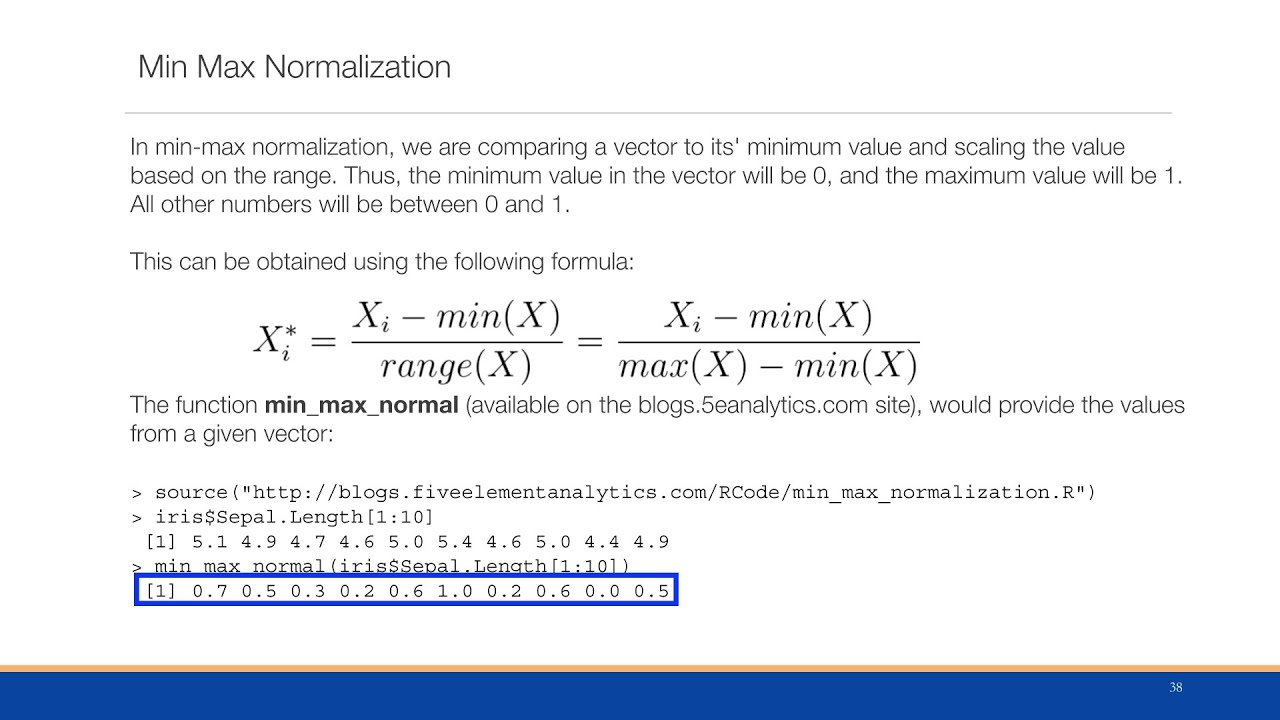

Normalization involves adjusting the values, ranges, and formats of the data so that it becomes more consistent. This can include rescaling numeric values to a common range (for example, [0, 1]), removing outliers, removing redundancies, filling in missing values, or grouping values.
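As a rough illustration of the rescaling and missing-value steps, the following Python sketch (standard library only) implements min-max scaling, z-score standardization, and mean imputation on a single column. The function names and the choice of filling gaps with the column mean are illustrative assumptions rather than a prescribed method; outlier removal and grouping are not shown.

```python
from statistics import mean, pstdev
from typing import Optional


def min_max_scale(values: list[float]) -> list[float]:
    """Linearly rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant column: there is no spread to rescale, so map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values: list[float]) -> list[float]:
    """Standardize values to mean 0 and population standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values, mu)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def fill_missing_with_mean(values: list[Optional[float]]) -> list[float]:
    """Replace None entries with the mean of the present values (one assumed strategy)."""
    present = [v for v in values if v is not None]
    fill = mean(present)
    return [fill if v is None else v for v in values]


# Hypothetical income column with one missing entry.
incomes = [30_000.0, None, 90_000.0, 60_000.0]
filled = fill_missing_with_mean(incomes)  # [30000.0, 60000.0, 90000.0, 60000.0]
print(min_max_scale(filled))              # [0.0, 0.5, 1.0, 0.5]
print(z_score(filled))
```

Min-max scaling keeps values in a fixed interval but is sensitive to extreme values, which is one reason outlier handling is often done first; z-score standardization instead centers each column and scales it by its spread.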

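Normalizing data can improve the performance of machine learning models. When features have very different ranges, such as age in years and income in dollars, the feature with the larger range can dominate distance calculations and gradient updates; putting features on a comparable scale reduces this bias and often speeds up training. It also helps ensure that the data is ready for efficient use in other applications.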
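The scale effect can be seen in a small nearest-neighbour check. In this Python sketch the customer records, the `(age, income)` features, and the helper names are hypothetical; it compares Euclidean nearest neighbours before and after min-max scaling each column.

```python
import math


def min_max_columns(rows: list[tuple[float, ...]]) -> list[list[float]]:
    """Min-max scale each column of a row-oriented dataset to [0, 1]."""
    scaled_cols = []
    for col in zip(*rows):
        lo, hi = min(col), max(col)
        span = hi - lo
        scaled_cols.append([0.0 if span == 0 else (v - lo) / span for v in col])
    # Transpose back from columns to rows.
    return [list(row) for row in zip(*scaled_cols)]


def nearest(i: int, rows) -> int:
    """Index of the row closest to rows[i] by Euclidean distance (excluding i)."""
    others = [j for j in range(len(rows)) if j != i]
    return min(others, key=lambda j: math.dist(rows[i], rows[j]))


# Hypothetical customers: (age in years, annual income in dollars).
customers = [
    (25.0, 50_000.0),
    (60.0, 50_500.0),
    (27.0, 52_000.0),
    (40.0, 80_000.0),
]

print(nearest(0, customers))                   # 1: income differences dominate
print(nearest(0, min_max_columns(customers)))  # 2: both features now contribute
```

On the raw data, customer 0 (age 25) looks closest to customer 1 (age 60) because a $500 income gap outweighs a 35-year age gap. After scaling, customer 2, who is close in both age and income relative to each column's range, comes out as the nearest neighbour.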

Overall, normalization is a vital step in data preprocessing as it helps ensure data is in a consistent and uniform form. This uniformity makes the data easier to read, interpret, and use with machine learning models.