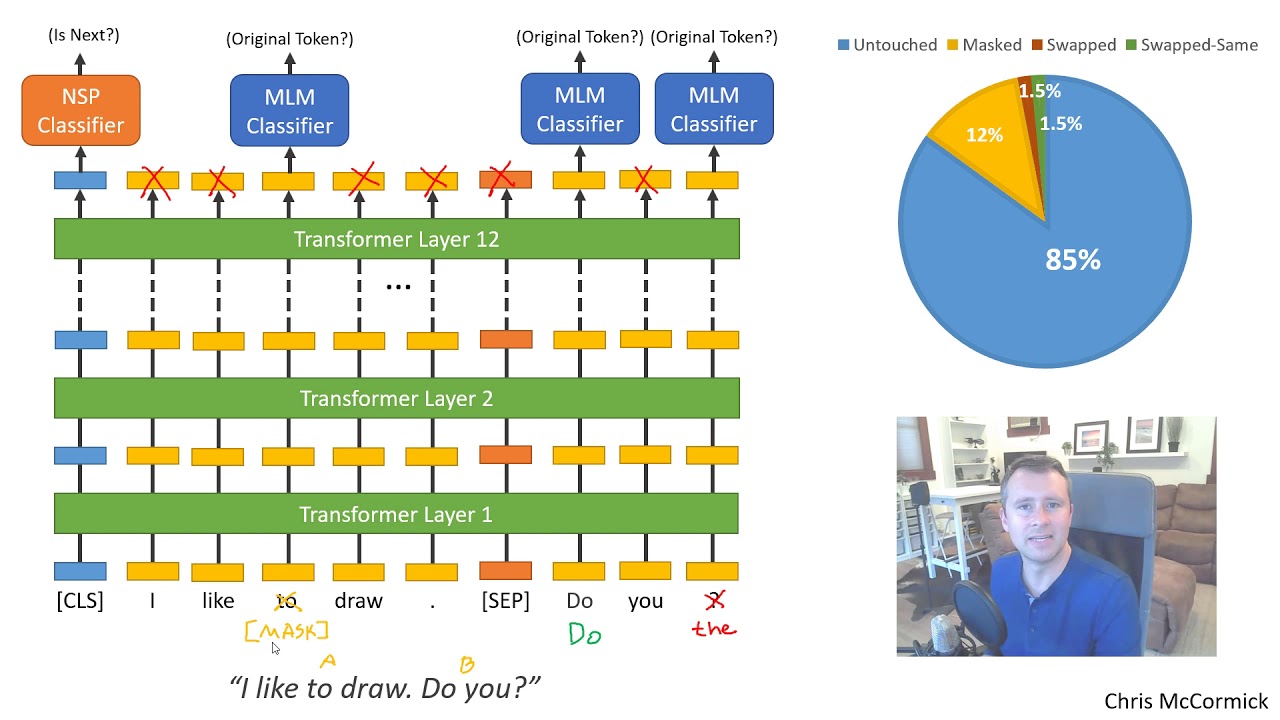

Masked language models are a class of natural language processing (NLP) models used for some of the more difficult tasks in natural language understanding (NLU). In a typical masked language model, some of the words in an input text are “masked” or “hidden,” and the model must “fill in the blanks” by predicting the original words from the surrounding context. Training on this objective pushes the model to learn what words mean in context, and the trained model can then be used to make predictions on new data or adapted to other inference tasks.
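To make the “fill in the blanks” setup concrete, here is a minimal sketch of how training examples for such a model could be prepared. The function name `mask_tokens`, the `[MASK]` placeholder string, and the whitespace tokenization are illustrative assumptions, not any particular library's API. The default masking rate of 15% follows the rate reported for BERT, but the procedure is simplified: BERT also sometimes substitutes a random token or leaves the chosen token unchanged.

```python
import random

MASK_TOKEN = "[MASK]"  # assumed placeholder; real tokenizers define their own


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Hide a random subset of tokens.

    Returns (masked_tokens, labels). labels[i] holds the original token
    at each masked position and None everywhere else, so a model would
    only be scored on the positions it had to guess.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)  # the model sees the blank...
            labels.append(tok)         # ...and must recover this word
        else:
            masked.append(tok)
            labels.append(None)        # unmasked positions are not targets
    return masked, labels


# Hypothetical example sentence, split on whitespace for simplicity.
sentence = "the cat sat on the mat".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=0)
print(masked)
print(labels)
```

A real system would feed `masked` to the model and compute a loss only at the positions where `labels` is not `None`.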

The fill-in-the-blank idea is much older than deep learning (it resembles the cloze test long used in reading assessment), but masked language modeling as a training objective came to prominence with BERT (Bidirectional Encoder Representations from Transformers), which was released by Google in 2018. Since then, masked language models have grown in popularity for applications such as question answering, summarization, and sentiment analysis, and BERT remains the best-known example. BERT is a deep learning-based method that learns language representations from unlabeled text using a stack of bidirectional “transformer blocks,” so the representation of each word is informed by the context on both its left and its right. The weights learned this way can then be fine-tuned for a specific task, which typically leads to better performance on natural language understanding tasks.

Masked language models have also found use in industry-scale projects that require the understanding and generation of complex text. For example, the technology has been used to build more accurate customer service chatbots and to support personalized recommendations.

The technology is still in its early stages but is growing rapidly. As more data becomes available and the accuracy of these models continues to improve, masked language models are expected to have a major impact on the future of natural language processing.